Human Action Photorealistic and Point-light Videos (HAPPV)

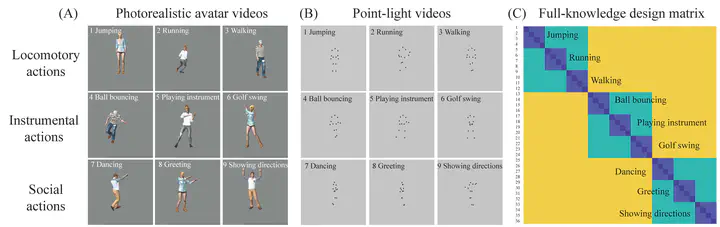

HAPPV includes photorealistic and point-light videos of 36 human actions.

The action stimuli used in the study [1] were generated from the Carnegie Mellon University Motion Capture Database (https://mocap.cs.cmu.edu).

Following Dittrich (1993), the action categories are grouped into three semantic classes:

- Locomotory actions: jumping, running, and walking
- Instrumental actions: ball bouncing, playing an instrument, and golf swing
- Social actions: dancing, greeting, and showing directions
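If you need these semantic classes as analysis labels, one option is a small lookup table. The sketch below is a hypothetical helper, not part of the HAPPV release: the names `SEMANTIC_CLASSES`, `CATEGORY_TO_CLASS`, and `semantic_class_of` are illustrative, and the category strings are copied from the list above rather than from the dataset's actual file names, which this README does not specify.

```python
# Hypothetical label map (not shipped with HAPPV): semantic classes and action
# categories as listed in this README, grouped following Dittrich (1993).
# The strings are illustrative and may not match the dataset's file names.
SEMANTIC_CLASSES: dict[str, tuple[str, ...]] = {
    "locomotory": ("jumping", "running", "walking"),
    "instrumental": ("ball bouncing", "playing an instrument", "golf swing"),
    "social": ("dancing", "greeting", "showing directions"),
}

# Invert the grouping so each action category maps to its semantic class.
CATEGORY_TO_CLASS: dict[str, str] = {
    category: semantic_class
    for semantic_class, categories in SEMANTIC_CLASSES.items()
    for category in categories
}


def semantic_class_of(category: str) -> str:
    """Return the semantic class of an action category (case-insensitive)."""
    try:
        return CATEGORY_TO_CLASS[category.strip().lower()]
    except KeyError:
        raise ValueError(f"unknown action category: {category!r}") from None


if __name__ == "__main__":
    print(semantic_class_of("Golf swing"))  # instrumental
```

Adapt the keys to whatever naming the downloaded files actually use; an unknown category raises `ValueError` instead of silently returning a wrong label.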

Citations:

[1] Yujia Peng, Xizi Gong, Hongjing Lu, and Fang Fang. Human Visual Pathways for Action Recognition Versus Deep Convolutional Neural Networks: Representation Correspondence in Late But Not Early Layers. *Journal of Cognitive Neuroscience*, 2024. https://doi.org/10.1162/jocn_a_02233

To download, please fill out this form: https://www.wjx.cn/vm/OtRRPQ9.aspx#